Episode 95: Gavin Kearney & Helena Daffern (AudioLab, University of York)

This episode is sponsored by HHB Communications, the UK’s leader in pro audio technology. For years, HHB has been delivering the latest and most innovative pro audio solutions to the world’s top recording studios, post facilities, and broadcasters. The team at HHB provide best-in-class consultation, installation, training, and technical support to customers who want to build or upgrade their studio environment for immersive audio workflows. To find out more or book a demo at their HQ facility, visit www.hhb.co.uk.

Summary

In this episode of the Immersive Audio Podcast, Oliver Kadel is joined by Professors Gavin Kearney and Helena Daffern from the AudioLab at the School of Physics, Engineering and Technology at the University of York, UK.

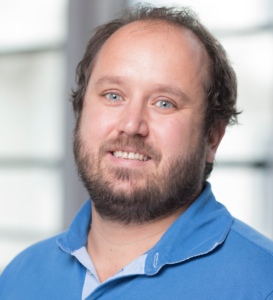

Gavin Kearney is a Professor of Audio Engineering at the School of Physics, Engineering and Technology at the University of York. He is an active researcher, technologist and sound designer for immersive technologies and has published over a hundred articles and patents relating to immersive audio. He graduated from Dublin Institute of Technology in 2002 with an honours degree in Electronic Engineering and has since obtained both MSc and PhD degrees in Audio Signal Processing from Trinity College Dublin. He joined the University of York as a Lecturer in Sound Design in the Department of Theatre, Film and Television in January 2011 and moved to the Department of Electronic Engineering in 2016. He leads a team of researchers at York AudioLab, which focuses on different facets of immersive and interactive audio, including spatial audio and surround sound; real-time audio signal processing; Ambisonics and spherical acoustics; game audio and audio for virtual and augmented reality; and the development of recording and audio post-production techniques.

Helena Daffern is currently a Professor in Music Science and Technology at the School of Physics, Engineering and Technology at the University of York. Her research utilises interdisciplinary approaches to investigate voice science and acoustics, particularly singing performance, vocal pedagogy, choral singing, and singing for health and well-being. Recent projects explore the potential of virtual reality as a means of improving access to group singing activities and as a tool for singing performance research. She received a BA (Hons.) degree in music, an MA degree in music, and a PhD in music technology, all from the University of York, UK, in 2004, 2005, and 2009 respectively. She went on to complete training as a classical singer at Trinity College of Music and worked in London as a singer and teacher before returning to York.

Helena and Gavin talk about the recently announced CoSTAR project, an initiative that leverages novel R&D in virtual production technologies, including CGI, spatial audio, motion capture and extended reality, to create groundbreaking live performance experiences.

Show Notes

Gavin Kearney LinkedIn – https://www.linkedin.com/in/gavin-p-kearney/?originalSubdomain=uk

Helena Daffern LinkedIn – https://www.linkedin.com/in/helena-daffern-32822439/?originalSubdomain=uk

AudioLab – https://audiolab.york.ac.uk/

University of York – https://www.york.ac.uk/

CoSTAR Project – https://audiolab.york.ac.uk/audiolab-at-the-forefront-of-pioneering-the-future-of-live-performance-with-a-new-rd-lab/

BBC Maida Vale Studios – https://www.bbc.co.uk/showsandtours/venue/bbc-maida-vale-studios

AudioLab goes to BBC Maida Vale Recording Studios – https://audiolab.york.ac.uk/audiolab-goes-to-bbc-maida-vale-recording-studios/

Project SAFFIRE – https://audiolab.york.ac.uk/saffire/

Our Sponsors

Innovate Audio offers a range of software-based spatial audio processing tools. Their latest product, panLab Console, is a macOS application that adds 3D spatial audio rendering capabilities to live audio mixing consoles, including popular models from Yamaha, Midas and Behringer. This means you can achieve an object-based audio workflow using the hardware you already own. Immersive Audio Podcast listeners can get an exclusive 20% discount on all panLab licences by using the code Immersive20 at checkout. Find out more at innovateaudio.co.uk *Offer available until June 2024.*

HOLOPLOT is a Berlin-based pro-audio company whose products include the multi-award-winning X1 Matrix Array. X1 is software-driven, combining 3D Audio-Beamforming and Wave Field Synthesis to achieve authentic sound localisation and complete control over sound in both the vertical and horizontal axes. HOLOPLOT is at the forefront of a revolution in sound control, enabling virtual loudspeakers to be positioned within a space and allowing for a completely new way of designing and experiencing immersive audio on a large scale. To find out more, visit holoplot.com.

Survey

We want to hear from you! We really value our community and would appreciate it if you would take our very quick survey and help us make the Immersive Audio Podcast even better: surveymonkey.co.uk/r/3Y9B2MJ Thank you!

Credits

This episode was produced by Oliver Kadel and Emma Rees and included music by Rhythm Scott.
